05 July, 2008

One of the best web interfaces for visualizing Subversion repositories (as well as providing
integrated project management and ticketing functionality) is the Trac Project, and all
Cipherdyne software projects use it. With its success, Trac has also become a target for
those who would try to subvert it for purposes such as creating spam. Because I deploy Trac
with the Roadmap, Tickets, and Wiki features disabled, my deployment essentially does not
accept user-generated content (such as comments in tickets) for display. This minimizes my
exposure to Trac spam, which has become a problem significant enough that various
spam-fighting strategies have been developed, such as configuring mod-security and a plugin
from the Trac project itself.

Even though my Trac deployment does not display user-generated content, there are still
places where Trac accepts query strings from users, and spammers seem to try to use these
fields for their own ends. Let's see if we can find a few examples. Trac web logs are
informative, but sifting through huge logfiles can be tedious. Fortunately, simply sorting
the logfiles by line length (and therefore by web request length) allows many suspicious web
requests to bubble up to the top. Below is a simple Perl script, sort_len.pl, that sorts any
ASCII text file by line length and precedes each printed line with the line length followed
by the number of lines equal to that length. Only one line is printed for each distinct
length (the last one seen), since the script is designed to handle large files - we want the
unusually long lines to be printed but the many shorter lines (which represent the vast
majority of legitimate web requests) to be summarized. This is an important feature
considering that at this point there is over 2.5GB of log data specifically from my Trac
server.

    $ cat sort_len.pl
    #!/usr/bin/perl -w
    #
    # prints out a file sorted by longest lines
    #
    # $Id: sort_len.pl 1739 2008-07-05 13:44:31Z mbr $
    #

    use strict;

    my %url       = ();
    my %len_stats = ();
    my $mlen = 0;
    my $mnum = 0;

    open F, "< $ARGV[0]" or die $!;
    while (<F>) {
        my $len = length $_;
        $url{$len} = $_;
        $len_stats{$len}++;
        $mlen = $len if $mlen < $len;
        $mnum = $len_stats{$len}
            if $mnum < $len_stats{$len};
    }
    close F;

    $mlen = length $mlen;
    $mnum = length $mnum;

    for my $len (sort {$b <=> $a} keys %url) {
        printf "[len: %${mlen}d, tot: %${mnum}d] %s",
                $len, $len_stats{$len}, $url{$len};
    }

    exit 0;

To illustrate how it works, below is the output of the sort_len.pl script used against
itself. Note that the more interesting code appears at the top of the output, whereas the
least interesting code (such as blank lines and lines that contain only a closing "}"
character) is summarized away at the bottom:

    $ ./sort_len.pl sort_len.pl
    [len: 51, tot: 1] # $Id: sort_len.pl 1739 2008-07-05 13:44:31Z mbr $
    [len: 50, tot: 1]     printf "[len: %${mlen}d, tot: %${mnum}d] %s",
    [len: 48, tot: 1]             $len, $len_stats{$len}, $url{$len};
    [len: 44, tot: 1] # prints out a file sorted by longest lines
    [len: 43, tot: 1] for my $len (sort {$b <=> $a} keys %url) {
    [len: 37, tot: 1]         if $mnum < $len_stats{$len};
    [len: 34, tot: 1]     $mlen = $len if $mlen < $len;
    [len: 32, tot: 1] open F, "< $ARGV[0]" or die $!;
    [len: 29, tot: 1]     $mnum = $len_stats{$len}
    [len: 25, tot: 1]     my $len = length $_;
    [len: 24, tot: 1]     $len_stats{$len}++;
    [len: 22, tot: 2] $mnum = length $mnum;
    [len: 21, tot: 1]     $url{$len} = $_;
    [len: 20, tot: 2] my %len_stats = ();
    [len: 19, tot: 1] #!/usr/bin/perl -w
    [len: 14, tot: 3] while (<F>) {
    [len: 12, tot: 1] use strict;
    [len:  9, tot: 1] close F;
    [len:  8, tot: 1] exit 0;
    [len:  2, tot: 5] }
    [len:  1, tot: 6]

Now, let's execute the sort_len.pl script against the trac_access_log file and look at one
of the longest web requests. (The sort_len.pl script was able to reduce the 12,000,000 web
requests in my Trac logs to a total of 610 interesting lines.) This particular request is
888 characters long. There were other similar suspicious requests of over 4,000 characters,
which are not displayed here for brevity:

    [len: 888, tot: 1] 195.250.160.37 - - [02/Mar/2008:00:30:17
    -0500] "GET /trac/fwsnort/anydiff?new_path=%2Ffwsnort%2Ftags%2Ffwsnort
    -1.0.3%2Fsnort_rules%2Fweb-cgi.rules&old_path=%2Ffwsnort%2Ftags%2F
    fwsnort-1.0.3%2Fsnort_rules%2Fweb-cgi.rules&new_rev=http%3A%2F%2Ff
    1234.info%2Fnew5%2Findex.html%0Ahttp%3A%2F%2Fa1234.info%2Fnew4%2F
    map.html%0Ahttp%3A%2F%2Ff1234.info%2Fnew2%2Findex.html%0Ahttp%3A%2F
    %2Fs1234.info%2Fnew9%2Findex.html%0Ahttp%3A%2F%2Ff1234.info%2Fnew6%2F
    map.html%0A&old_rev=http%3A%2F%2Ff1234.info%2Fnew5%2Findex.html%0Ahttp
    %3A%2F%2Fa1234.info%2Fnew4%2Fmap.html%0Ahttp%3A%2F%2Ff1234.info%2Fnew2
    %2Findex.html%0Ahttp%3A%2F%2Fs1234.info%2Fnew9%2Findex.html%0Ahttp%3A
    %2F%2Ff1234.info%2Fnew6%2Fmap.html%0A HTTP/1.1" 200 3683 "-"
    "User-Agent: Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1;
    Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1) ; .NET CLR
    1.1.4322; .NET CLR 2.0.50727; InfoPath.2)"

My guess is that the above request comes from a bot that is trying to do one of two things:
1) force Trac to accept the content in the request (which contains a bunch of links to pages
like "http://f1234.info/new/index.html" - note that I altered the domain so as to not
legitimize the original content) and display it for other Trac users or to search engines, or
2) force Trac itself to generate web requests to the provided links (perhaps as a way to
increase hit or referrer counts from domains - like mine - that are not affiliated with the
spammer). Either way, the strategy is flawed because the request is against the Trac
"anydiff" interface, which doesn't accept user content other than svn revision numbers, and
(at least in Trac-0.10.4) such requests do not cause Trac to issue any external DNS or web
requests - I verified this with tcpdump on my Trac server after generating similar requests
against it.
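The links packed into a request like the one above are easier to read once the query string
is percent-decoded. What follows is only a minimal sketch and not part of sort_len.pl or
Trac: the extract_links helper name is made up for illustration, and the code assumes that
each log entry sits on a single line with the request in the usual quoted
"METHOD URI PROTOCOL" field.

```perl
#!/usr/bin/perl -w
#
# Sketch: list the URLs carried in the query string of a logged request.
# extract_links() is a hypothetical helper (an assumption for illustration).
#
use strict;

sub extract_links {
    my ($log_line) = @_;

    # pull the request URI out of the quoted "METHOD URI PROTOCOL" field
    my ($uri) = $log_line =~ /"(?:GET|POST|HEAD)\s+(\S+)\s+HTTP/
        or return ();
    my ($query) = $uri =~ /\?(.*)/ or return ();

    my @links = ();
    for my $pair (split /&/, $query) {
        my ($name, $value) = split /=/, $pair, 2;
        next unless defined $value;

        # undo the encoding (%3A -> ':', %2F -> '/', %0A -> newline)
        $value =~ tr/+/ /;
        $value =~ s/%([0-9A-Fa-f]{2})/chr(hex($1))/ge;

        # the decoded newlines separate the individual URLs
        for my $item (split /\n/, $value) {
            push @links, "$name: $item" if $item =~ m|^https?://|;
        }
    }
    return @links;
}

# only read a logfile when one is given on the command line
if (@ARGV) {
    open my $fh, '<', $ARGV[0] or die $!;
    while (my $line = <$fh>) {
        print "$_\n" for extract_links($line);
    }
    close $fh;
}
```

Run against a logfile, this prints one "parameter: URL" pair per embedded link, which makes it
obvious that the new_rev and old_rev arguments hold lists of links instead of revision
numbers.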

Still, in all of my Trac web logs, the most suspicious web requests are against the
"anydiff" interface, and specifically against the "web-cgi.rules" file bundled within the
fwsnort project. However, the requests never come from the same IP address, the "anydiff"
spam attempts never hit any link other than the web-cgi.rules page, and they began arriving
regularly in March 2008. This makes a stronger case for the activity coming from a bot that
is unable to infer that its activities are not actually working (no surprise there).
Finally, I left the original IP address 195.250.160.37 of the web request above intact so
that you can look for it in your own web logs. Although 195.250.160.37 is not listed in the
Spamhaus DNSBL service, a rudimentary Google search indicates that 195.250.160.37 has been
noticed before by other sites as a comment spammer.
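If you want to check your own logs for the same pattern, the observations above (many source
addresses, a single target path, a start date) can be tallied with a few lines of Perl. This
is a rough sketch under stated assumptions and not the method used to produce the numbers in
this post: it assumes Apache combined-format log lines, it treats any "anydiff" request whose
new_rev or old_rev argument begins with an encoded "http://" as suspicious, and the
tally_anydiff helper name is hypothetical.

```perl
#!/usr/bin/perl -w
#
# Sketch: summarize "anydiff" requests whose revision arguments carry URLs.
# tally_anydiff() is a hypothetical helper (an assumption for illustration).
#
use strict;

sub tally_anydiff {
    my ($fh) = @_;
    my (%by_ip, %by_month, %by_path);

    while (my $line = <$fh>) {
        # source IP, month and year from [dd/Mon/yyyy:...], and request URI
        my ($ip, $mon, $year, $uri) = $line =~
            m|^(\S+) \S+ \S+ \[\d+/(\w+)/(\d+):[^\]]*\] "\S+ (\S+)|
            or next;

        # a legitimate rev is a number, so an encoded "http://" stands out
        next unless $uri =~ m|/anydiff\?|
            && $uri =~ /(?:new|old)_rev=http%3A%2F%2F/i;

        $by_ip{$ip}++;
        $by_month{"$mon $year"}++;
        $by_path{$1}++ if $uri =~ /new_path=([^&]*)/;
    }
    return (\%by_ip, \%by_month, \%by_path);
}

# only read a logfile when one is given on the command line
if (@ARGV) {
    open my $fh, '<', $ARGV[0] or die $!;
    my ($by_ip, $by_month, $by_path) = tally_anydiff($fh);
    close $fh;

    my $total = 0;
    $total += $_ for values %$by_ip;
    printf "%d suspicious anydiff requests from %d distinct IPs\n",
        $total, scalar keys %$by_ip;
    print "  $_: $by_month->{$_}\n" for sort keys %$by_month;
    print "  $_: $by_path->{$_}\n"  for sort keys %$by_path;
}
```

A request count close to the number of distinct IPs, together with a single new_path value,
would match the behavior described above. The month and path lists are sorted by name, not
by date or count.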

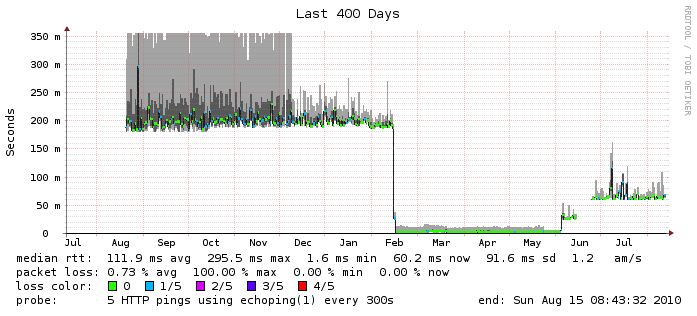

I'm a big fan of the Smokeping project developed by Tobi Oetiker, and I use it to graph ICMP,
DNS, and HTTP round trip times for my local internet connections and also for cipherdyne.org.
Since the beginning of 2010, I've switched around where cipherdyne.org was hosted from a
system at a hosting provider in Canada to a system running on my home network (serviced by a
Verizon FiOS connection). The drop in RTTs in mid-February was expected and quite large -
going from an average of around 200ms down to about 20ms. Then, in June, I switched to a
different Verizon DSL connection and moved the cipherdyne.org webserver system to a newer
Verizon FiOS connection at a different location, and the RTTs went up a bit to about 50ms.
Here is the graph:
